We are swimming in alternative facts and newspeak, lies posing as truth and conspiracy theories intended to distort reality, or so it seems. This is not new, but it appears to be greatly amplified of late, and it’s creating a scary dilemma for our democratic society. More than that, it is exposing a puzzling paradox deep in the human psyche, something we dearly need to understand. Consider this familiar example.

On the first day of the Trump administration, press secretary Spicer responded to unfavorable press accounts by claiming, “This was the largest audience to ever witness an inauguration — period — both in person and around the globe.” This was blatant gaslighting, an attempt to spread misinformation in the face of abundant evidence proving the statement unquestionably false. Silly propaganda for sure, but it also set the stage for a terrific sociology experiment.

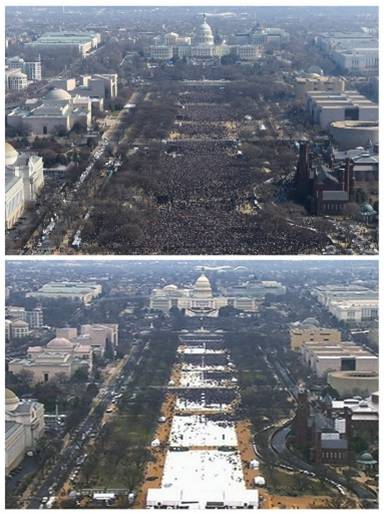

Neuroscientists picked up on the story and saw an opportunity to study the neural mechanisms involved in protecting our most strongly held beliefs against counterevidence. They used the now famous crowd photos from the Obama and Trump inaugurations to ask this question: “How far are Trump supporters willing to go to accept his administration’s argument?”

The photos were retweeted on a National Park Service Twitter account. The next day the Trump administration ordered the Park Service to suspend the account.

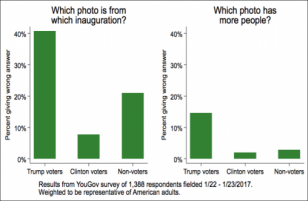

In the two days after the inauguration, they surveyed 1,388 adult Americans. Half were shown unlabeled crowd pictures from both the Obama (upper) and Trump (lower) inaugurations and asked which was from Trump’s day and which from Obama’s. This is what they expected to find: “If the past is any guide, we would expect that Trump supporters would be more likely to claim that the picture with the larger crowd was the one from the Trump inauguration, as doing so would express and reinforce their support for him. Further, as some respondents had never seen these photos, uncertainty regarding the answer would likely lead them to choose the photograph that would be most in line with their partisan loyalties.” The other half of the people in the survey were simply asked, “Which photo has more people?”, a question with only one correct answer.

They wondered, “Would some people be willing to make a clearly false statement while looking at clear photographic evidence simply to support the Trump administration’s claims?” The answer is yes. The figure below shows the percentage of people who gave the wrong answer to each question. In both cases, people who said they had voted for Trump were significantly more likely to answer the questions incorrectly than those who voted for someone else or did not vote at all.

What is truly amazing is that 15 percent of Trump voters said that the crowd of people was larger in the lower image (Trump) than in the upper image (Obama). It seems crazy that with photographic evidence right in front of their eyes, one in seven Trump supporters gave the incorrect answer in defiance of their senses. Why would anyone do this? That is the paradox I want to address, and it concerns something called expressive responding: people will choose something they don’t believe rather than give up on a previous belief. Clearly, the photos placed some voters in a tough spot. An article by Brian Resnick, writing for Vox, suggested a way to understand this. His subtitle frames the topic perfectly: “Why we react to inconvenient truths as if they were personal insults”.

People often resist changing their beliefs when directly challenged, especially when those beliefs are central to their identity. Resnick suggests that partisan identities get tied up in our personal identities. If that is so, it would mean that an attack on a strongly held belief is an attack on the self and the brain is built to protect the self. He says, “We protect the integrity of our worldview by seeking out information to confirm what we already know and dismissing facts that are hostile to our beliefs.” That could go a long way to explain what we saw at political rallies last year, as well as the strange resistance to facts about climate change.

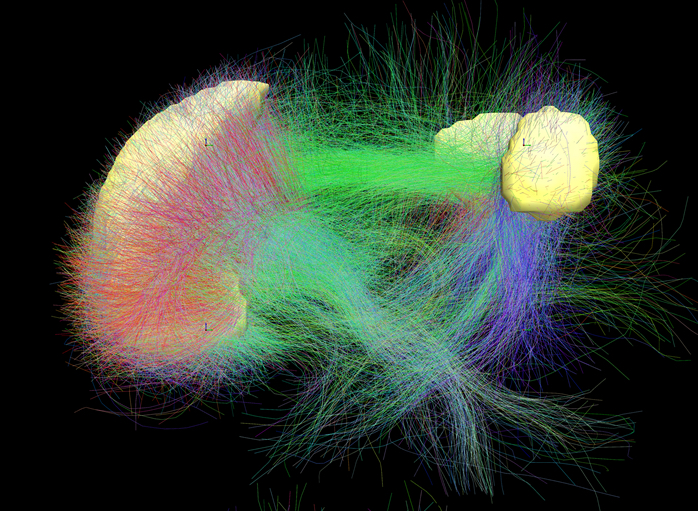

Resnick’s article draws on research by Jonas Kaplan, Sarah Gimbel & Sam Harris. They took 40 people who identified themselves as liberals with “deep convictions”, put them in an fMRI scanner and started challenging their political beliefs. They were looking for the neural systems involved in maintaining belief in the face of evidence to the contrary. What they observed in these people was increased activity in the default mode network, a set of interconnected brain areas associated with self-identity and disengagement from the external world. They also found that areas of the brain involved in emotion regulation were activated by the challenge. These are places we can go to defend ourselves from threats. It is a form of internally directed cognition.

Default mode connectome (forebrain, yellow, to the right) showing connections between regions activated when thought is internally directed. Connectivity increases throughout life, suggesting an increasing tendency toward preconception.

The implication from the study is that we tend to mistake ideological challenges for personal insults. Kaplan phrased it this way, “The brain’s primary responsibility is to take care of the body, to protect the body. The psychological self is the brain’s extension of that. When our self feels attacked, our [brain is] going to bring to bear the same defenses that it has for protecting the body.” I take that to include the adrenaline rush of the fight-or-flight response and all the stress that produces, hence the name calling and sometimes violent expression at campaign rallies.

A tool for combating fake news

Inaccurate beliefs are tenacious, so how do you go about gracefully introducing empirical facts into a polarized discussion in the hope of shifting opinion? We all know what doesn’t work, but a new study describes an approach to counter fake news with better results.

Vaccines work by exposing the immune system to a weakened version of the threat in order to build tolerance. A similar logic can be applied to help “inoculate” people against misinformation. It is not a substitute for critical thinking skills, but it is a help.

Take climate change, for example, a topic totally polarized by the damaging influence of fake news propagating myths. Researchers from Cambridge, Yale & George Mason universities compared reactions to a well-known climate change fact with those to a popular misinformation campaign contending that there is no consensus among scientists, which is false. When presented consecutively, the false statement completely cancelled out the fact (there is nearly complete consensus) in people’s minds. Fake news was having a good day.

Next, they added a small dose of climate change fact before the delivery of misinformation. For example, they briefly introduced people to the distortion tactics used by the fossil fuel industry. This inoculation helped shift and hold opinions closer to the truth – despite the subsequent exposure to the industry’s fake news. The inoculation technique shifted the climate change opinions of Republicans, Independents and Democrats alike.

“Misinformation can be sticky, spreading and replicating like a virus,” says lead author Dr Sander van der Linden, a social psychologist from Cambridge and Director of the Cambridge Social Decision-Making Lab. “We wanted to see if we could find a ‘vaccine’ by pre-emptively exposing people to a small amount of the type of misinformation they might experience. A warning that helps preserve the facts.” “The idea is to provide a cognitive repertoire that helps build up resistance to misinformation, so the next time people come across it they are less susceptible.” Give this a try and let me know how it works out.

– neuromavin