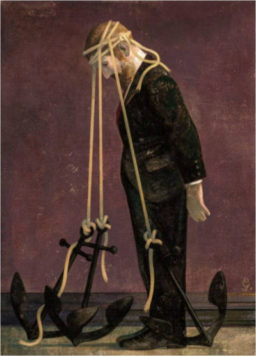

Artist: Gérard DuBois

America is deeply polarized and divided. Perhaps it has always been, but it feels like the restraints normally imposed by reason are broken. I think that at the core of the problem is something psychologists call myside-bias or confirmation bias. It is our abiding tendency to search for, favor and interpret information in a way that confirms our preexisting beliefs while giving little credence to alternative views. We are all susceptible and it is dangerous because it leaves us vulnerable to manipulation. Online search and the 24hr news cycle make it easy to fall into this unproductive cognitive trap. And, of course, myside-bias is strongest for emotionally charged issues and deeply entrenched beliefs, for example those involving religion, race and politics. It means that people will interpret ambiguous or even blatantly false evidence as supporting their position because it is just easier to do it that way. In fact, news sources offer varying accounts of the very same event in a fashion perfectly suited to amplify social polarization. Basically, people can’t think straight and reasonable persons are sometimes totally irrational. How did we come to be this way?

Elizabeth Kolbert wrote a thoughtful article for The New Yorker entitled Why Facts Don’t Change Our Minds. I am going to paraphrase much of it here, and a lot of the research for this piece is hers. I give credit to Elizabeth for what follows.

Origin of myside-bias and the tribal narrative

Kolbert points to a book, The Enigma of Reason, written by two cognitive scientists, Hugo Mercier & Dan Sperber. They contend that reason is an evolved trait, like bipedalism, that emerged on the savannas of Africa and should be understood in that context. Their argument goes like this: humans’ biggest advantage over other species is our ability to cooperate. Reason developed not to enable us to solve abstract problems or draw conclusions from unfamiliar data. Rather, it developed to resolve the problems posed by living in collaborative social groups. From this perspective, myside-bias is about adopting a narrative that identifies us as a member of the tribe in good standing, justifies our actions to the tribe and helps us win arguments. This is particularly useful when there are other quarrelsome tribes nearby. One could argue that our fractious culture is still tribal in nature.

The authors point out that humans aren’t uniformly uncritical. Presented with someone else’s argument, we’re quite adept at spotting the weaknesses. The beliefs whose flaws we’re blind to are our own. This lopsidedness reflects the task that reason evolved to perform. Living in small bands, our ancestors were primarily concerned with their social standing, and with making sure that they weren’t the ones risking their lives on the hunt while others loafed around the camp. There was little advantage to abstract reasoning but much to gain from winning arguments. Our ancestors didn’t have to contend with alternative facts, fake news, Twitter and such. It’s no wonder, then, that today reason often seems to fail us. As Mercier & Sperber write, “This is one of many cases in which the environment changed too quickly for natural selection to catch up.”

In their book, The Knowledge Illusion: Why We Never Think Alone, Steven Sloman & Philip Fernbach observe that people believe that they know way more than they actually do. What allows us to persist in this belief is other people, through what they call a community of knowledge. We rely on the expertise of others, something that must go way back to hunter-gatherer days. This brings the risk that if you are not careful you might mistake fake news for fact. When we divide cognitive labor in this way there is not a sharp boundary between one person’s ideas and those of others. Of course, the sharing of ideas is the way technology grows and is crucial to what we consider progress, but it almost guarantees that progress will be a bumpy ride.

The community of knowledge can be problematic when it comes to politics if we place too much faith in what we hear. Sloman & Fernbach asked people for their stance on questions like: Should there be a single-payer health-care system? Or merit-based pay for teachers? Participants were asked to rate their position depending on how strongly they agreed or disagreed with the proposal. Next, they were asked to explain, in as much detail as they could, the impacts of implementing it. This is where most people ran into trouble; they found they actually didn’t know much about the issue. Asked once again to rate their views, they ratcheted down the intensity, so that they either agreed or disagreed less vehemently. The participants were happy with their bias until asked to explain it. Uncritical acceptance of the party line had betrayed them. It is important to recognize that this has little to do with intelligence but a great deal to do with education.

A clear demonstration of confirmation bias was made at Stanford. Students were placed in two groups based on their opinion about capital punishment. Half the students were in favor of it and thought that it deterred crime. The other half were against it and thought that it had no effect on crime rate. Both groups were asked to read two articles. One provided data in support of the deterrence argument and the other provided data that called it into question. Both articles were fictitious but had been designed to present what were, objectively speaking, equally compelling statistics. The students who had originally supported capital punishment rated the pro-deterrence data highly credible and the anti-deterrence data unconvincing. The students who’d originally opposed capital punishment did the reverse. At the end of the experiment, they were asked once again about their views. Those who began pro-capital punishment were now even more in favor of it; those who had opposed it were even more hostile.

Confirmation bias is everywhere

One distinguishing feature of scientific thinking is the search for falsifying as well as confirming evidence. You can think of this as an effort to limit confirmation bias. History tells us, however, that there is a strong tendency for scientists to resist new discoveries or contradictory evidence by selectively ignoring data that bucks the current consensus. To combat this, scientific training teaches ways to minimize bias, and peer review is there to mitigate the effect, even though the peer review process itself may be susceptible to bias. Worse, data that conflict with the experimenter’s expectations may be discarded as unreliable and never see the light of day. This is especially troublesome when evaluating nonconforming results, since biased individuals may regard opposing evidence as weak in principle and give little serious thought to revising their beliefs. Scientific innovators often meet with resistance from the scientific community, and research presenting controversial results frequently receives harsh peer review. I think that the U.S. Congress may suffer from similar tendencies.

Myside-bias is hard to avoid even for well-meaning people. To me the strangest thing is that people will profess that they “don’t believe in science” (or evolution or climate change) when in truth belief has nothing to do with it. Providing these people with accurate information does not help; they simply discount it. It appears such people suffer from abnormally high bullshit receptivity. Sara Gorman & Jack Gorman address this in their book, Denying to the Grave: Why We Ignore the Facts That Will Save Us.

Myside-bias and the brain

There has been progress in understanding the neurobiology of myside-bias. People appear to experience genuine pleasure, a rush of dopamine, when presented with information that supports their beliefs. It feels good to ‘stick to your guns’ even if we are wrong and it feels good to be in a like-minded crowd. Once again, it’s the tribal narrative: there is comfort in the party line that identifies us as a member of the group. We see this acted out in spades at political rallies these days.

A study of myside-bias using brain imaging was done during the 2004 U.S. presidential election with participants who reported having strong feelings about the candidates. They were shown apparently contradictory pairs of statements, attributed to Republican candidate George W. Bush, Democratic candidate John Kerry or a politically neutral public figure. They were also given statements that made the apparent contradiction seem reasonable. They then had to decide whether each individual’s statements were inconsistent. The result: Participants were much more likely to interpret statements from the candidate they opposed as being contradictory.

The participants made their judgments while being observed with fMRI to monitor activity in different brain regions. When they were asked to evaluate contradictory statements by their beloved candidate, emotional centers of their brains were activated. This did not happen with statements from the other figures. It is apparent that the different responses were not due to passive reasoning errors. Instead, the participants were actively reducing the cognitive dissonance induced by reading about their favored candidate’s irrational or duplicitous behavior. When confronted with inconvenient data, the participants reacted by entering into default mode, closing off outside information as a way to protect their strongly held belief. This may explain Trumpism and social polarization, at least in part.

All of this brings to mind a kind of meta-incompetence called the Dunning-Kruger effect; perhaps you know of it. It refers to a cognitive bias in which a person suffers from the illusion of superiority, mistakenly assessing their knowledge or mastery of the facts as much greater than it really is. It has a flip-side: high-ability individuals may underestimate their relative competence and erroneously assume that tasks that are easy for them are also easy for others. I think myside-bias may be explained in part by the Dunning-Kruger effect.

In the stressful world we live in, with the noisy background of alternative facts and little time to process information in depth, myside-bias emerges as something dangerous. It may be an old and deeply entrenched part of human nature, but no one has yet found a way to overcome it for the good of the global society. Others have recognized the problem, for example Bertrand Russell: “One of the painful things about our time is that those who feel certainty are stupid, and those with any imagination and understanding are filled with doubt and indecision.” Also Shakespeare: “The fool doth think he is wise, but the wise man knows himself to be a fool” (As You Like It).

-neuromavin